Training Flux LoRa

In this article, I provide a step-by-step guide to training a Flux LoRa on SeaArt, along with some tips to make the training process easier.

I will focus on training with anime illustrations, as I have a greater affinity for anime compared to realistic styles.

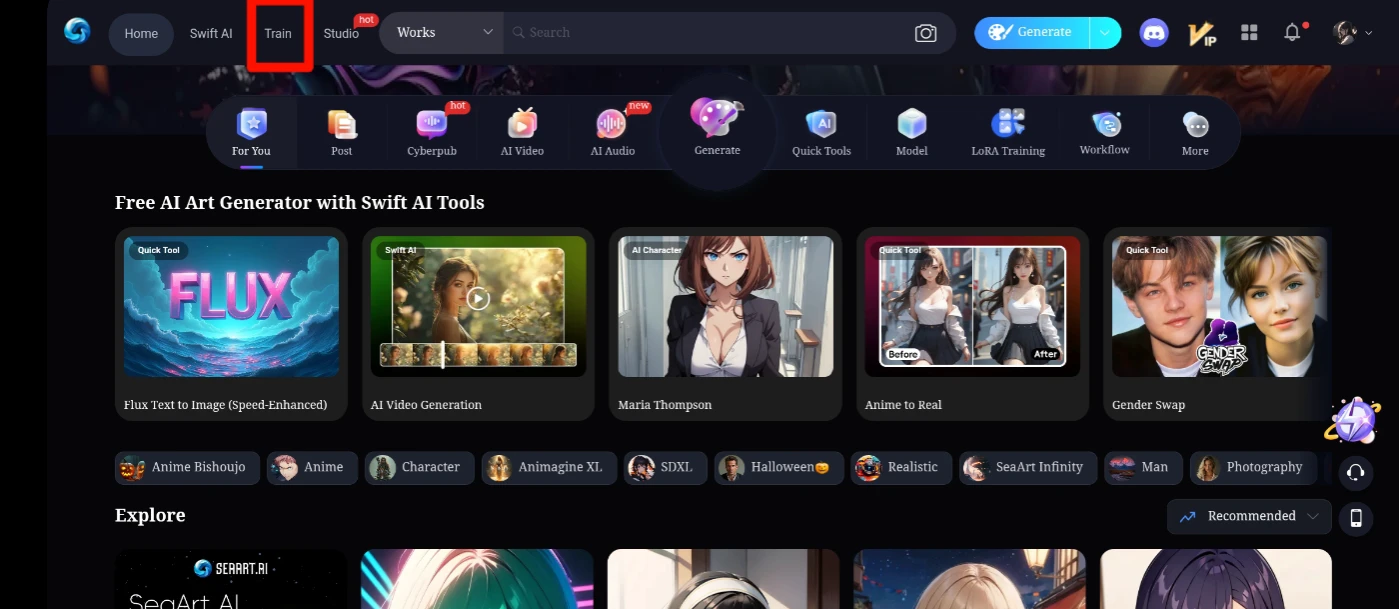

First, open the SeaArt website and click "Train" in the navigation menu.

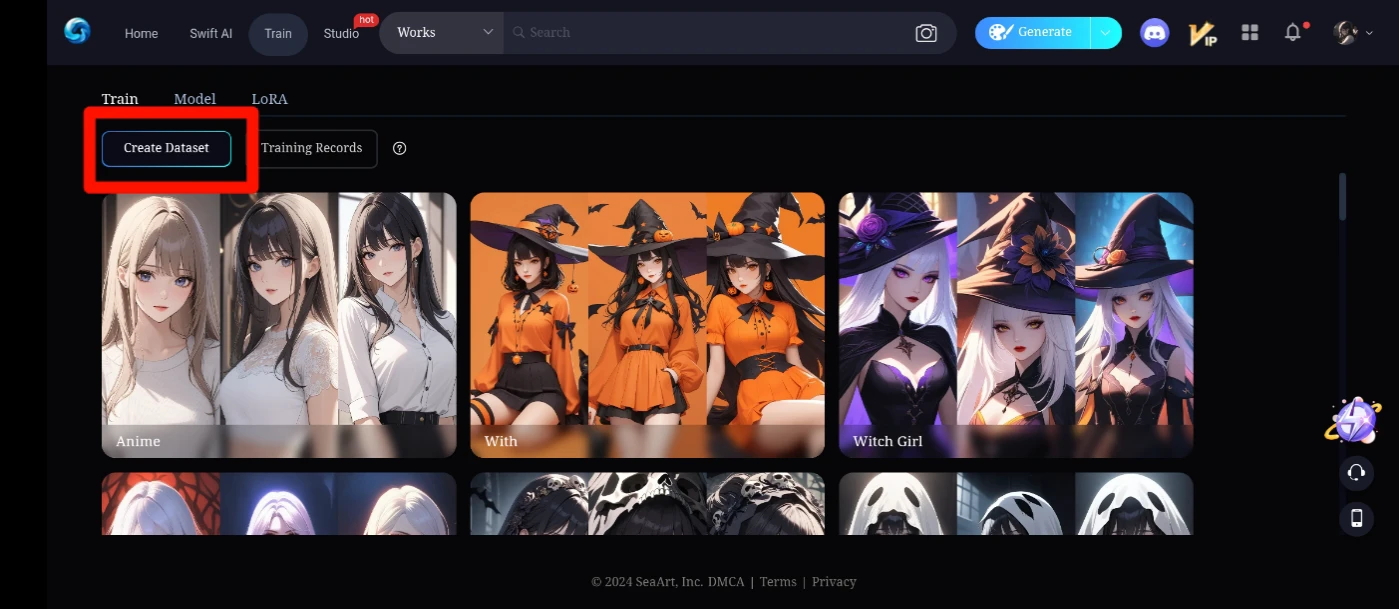

Please select "Create Dataset" to initiate the creation of a new dataset.

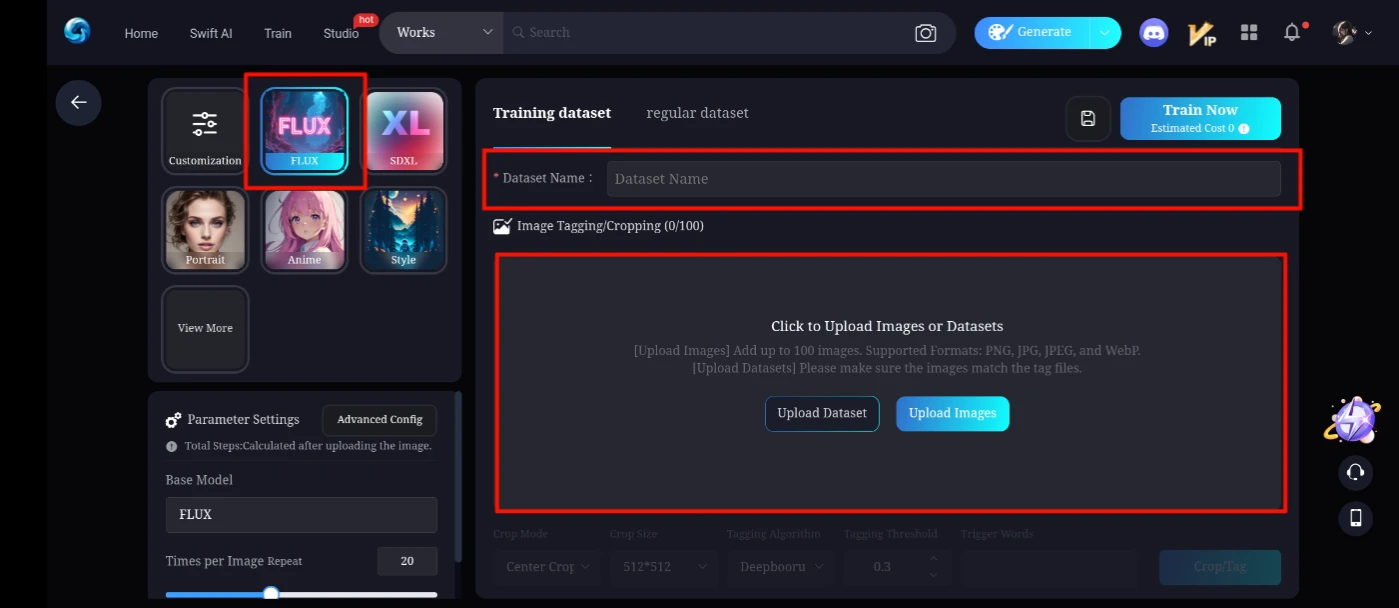

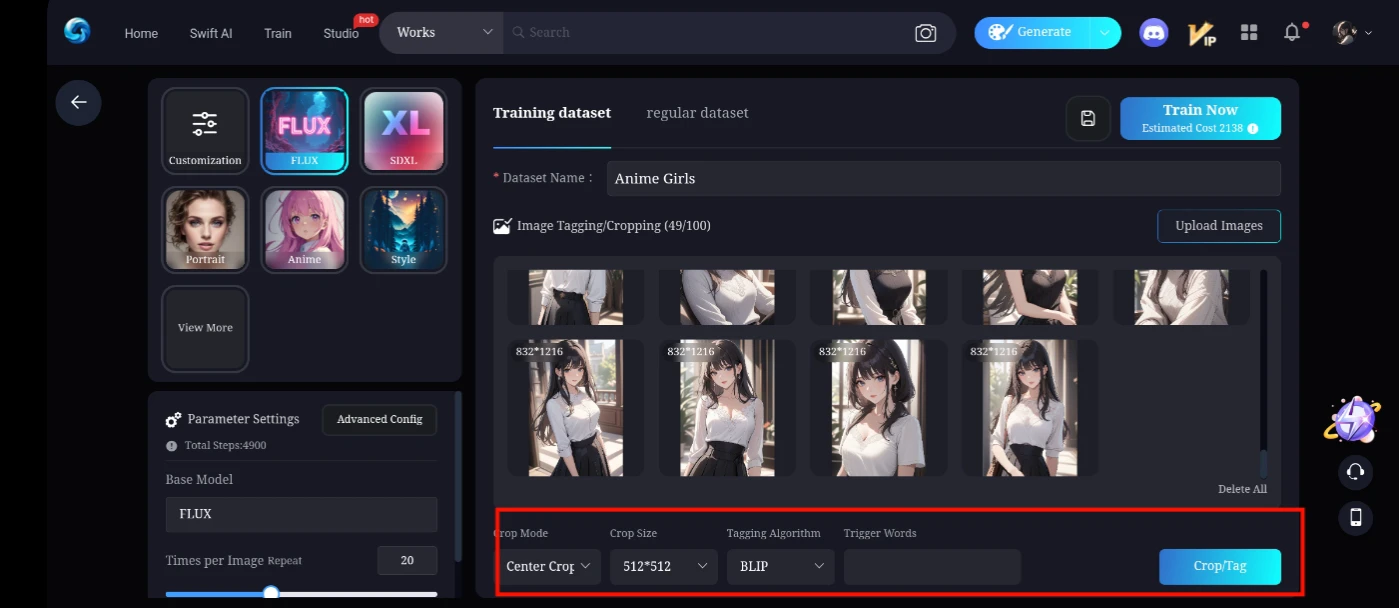

Please select "Flux" and designate a name for the dataset. Subsequently, upload the images for training by clicking the "Upload Images" button.

The recommended aspect ratio is 1:1. If your images have a different ratio, you can crop them with the built-in cropping feature. BLIP can generate tag suggestions for the images, and if you need trigger words, you can enter them in the designated box. After that, click "Crop/Tag."
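If you would rather prepare images before uploading, the 1:1 crop can be worked out by hand. The following is a minimal sketch that computes the largest centered square for an image of a given size. The function name is hypothetical, and SeaArt's built-in cropper may choose a different region.

```python
def center_square_crop_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)          # a square cannot exceed the shorter edge
    left = (width - side) // 2         # trim equally from left and right
    top = (height - side) // 2         # trim equally from top and bottom
    return left, top, left + side, top + side


# A 1920x1080 landscape image keeps its full height and loses 420 px per side.
print(center_square_crop_box(1920, 1080))  # (420, 0, 1500, 1080)
```

The resulting box uses the (left, top, right, bottom) order that most image libraries expect for a crop call.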

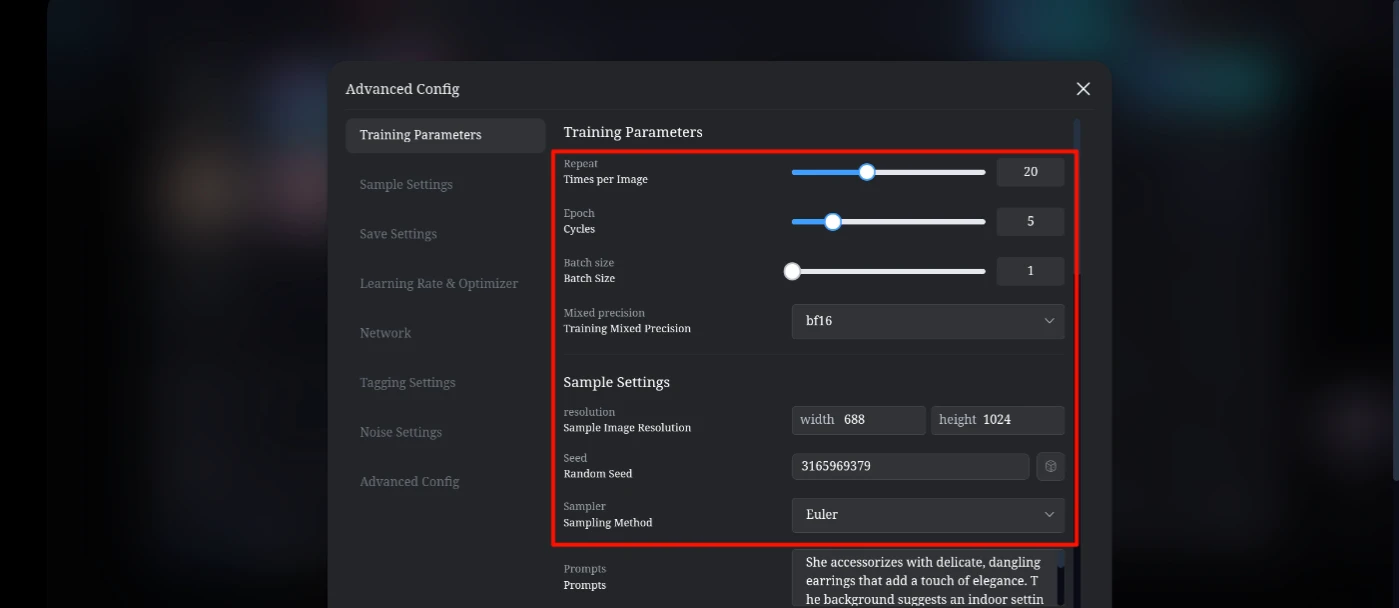

Before clicking "Train Now," open the Advanced Settings. I will go through the parameters there one by one.

I use a repeat count of 20, with 5 epochs and a batch size of 1. If you upload more than 70 images (100, for example), you may reduce the repeat count. Since I am using 49 images, I keep the default settings. If your training results do not meet expectations, you can increase the repeat count or the number of epochs.
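To see why a larger dataset can get by with fewer repeats, it helps to estimate the total number of training steps. The sketch below assumes the convention common to LoRa trainers, where each image is seen `repeats` times per epoch. The article does not confirm that SeaArt counts steps exactly this way, so treat the numbers as an estimate.

```python
import math


def total_training_steps(num_images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """Estimate optimizer steps: images x repeats per epoch, grouped into batches."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


# The settings from this article: 49 images, 20 repeats, 5 epochs, batch size 1.
print(total_training_steps(49, 20, 5, 1))   # 4900

# With 100 images, halving the repeat count gives a similar amount of training.
print(total_training_steps(100, 10, 5, 1))  # 5000
```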

Training Mixed Precision: Please use the default setting, BF16.

Please use the default resolution for sample images, as it cannot be altered.

For the random seed, I leave the default value.

I recommend the Euler sampler, as it is the best option I am aware of.

For the positive prompt, include prompts that are representative of the images in the dataset you created earlier; this improves the sample results.

For the negative prompt, you may either utilize the default option or leave it blank, as the FLUX model does not incorporate a negative prompt.

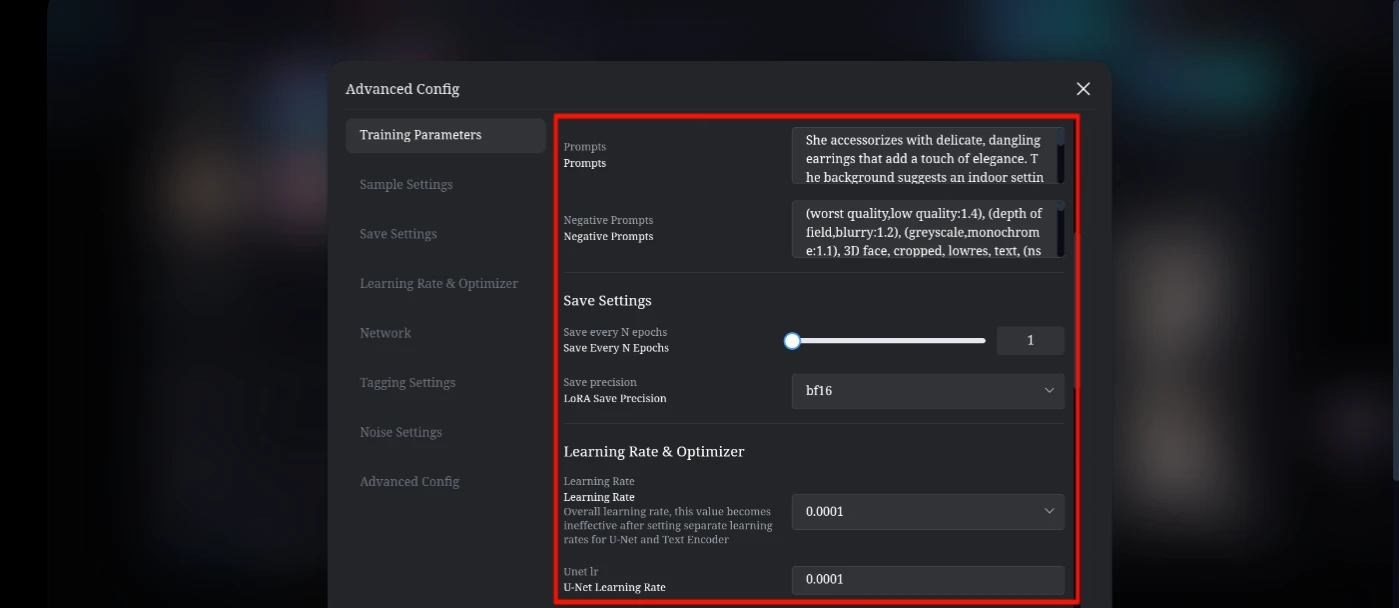

For the "Save Every N Epochs" option, you may select 1 so that every epoch is saved. If you do not need the intermediate epochs, you can set it to the total number of epochs you selected earlier.
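A quick way to picture this option is to list which epochs end up saved. This is a minimal sketch with a hypothetical function name. It assumes the final epoch is always kept, which the article does not state explicitly.

```python
def saved_epochs(total_epochs: int, save_every_n: int) -> list[int]:
    """List the epoch numbers that produce a saved LoRa file."""
    saved = [e for e in range(1, total_epochs + 1) if e % save_every_n == 0]
    if total_epochs not in saved:
        saved.append(total_epochs)  # assumption: the last epoch is always saved
    return saved


print(saved_epochs(5, 1))  # [1, 2, 3, 4, 5]
print(saved_epochs(5, 5))  # [5]
```

Saving every epoch costs nothing extra in training time and leaves earlier epochs available for comparison if the last one turns out overtrained.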

For LoRa Save Precision, I will utilize the default option.

When selecting a learning rate, you may use the default (0.0001) or choose a lower rate. If you choose a lower learning rate, make sure to increase the repeat count accordingly.

For the U-Net model, I utilize a learning rate of 0.0001.

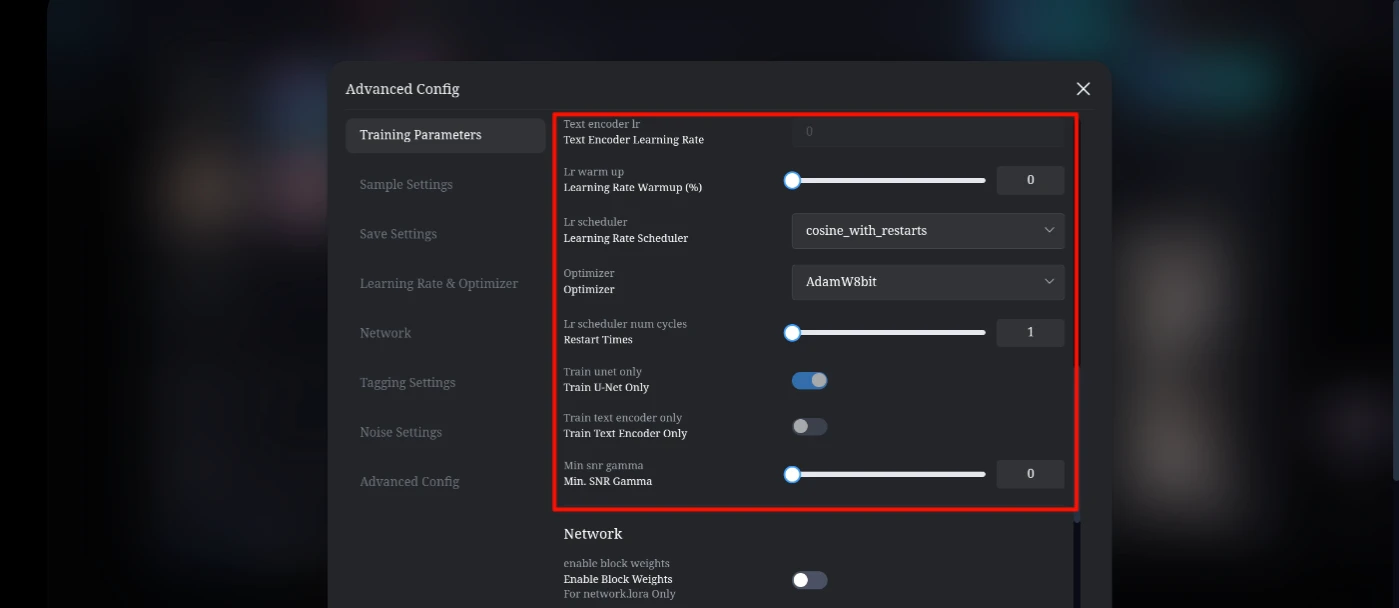

The Text Encoder Learning Rate is set to 0.

I utilize the default Learning Rate Warmup (%).
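The warmup percentage is the share of training steps during which the learning rate ramps up to its target value. As a rough illustration, the sketch below converts a percentage into a step count. Both the 5% value and the 4,900-step run are hypothetical examples, not SeaArt defaults.

```python
def warmup_steps(total_steps: int, warmup_percent: float) -> int:
    """Convert a warmup percentage into a whole number of steps."""
    return int(total_steps * warmup_percent / 100)


# Hypothetical example: 5% warmup over a 4,900-step run.
print(warmup_steps(4900, 5))  # 245
```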

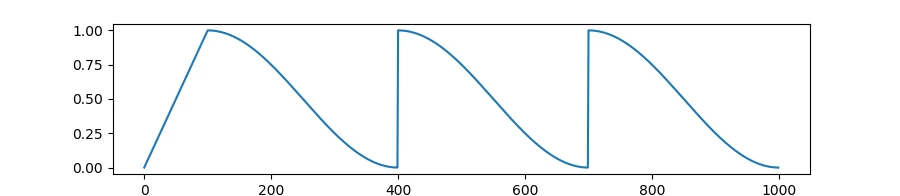

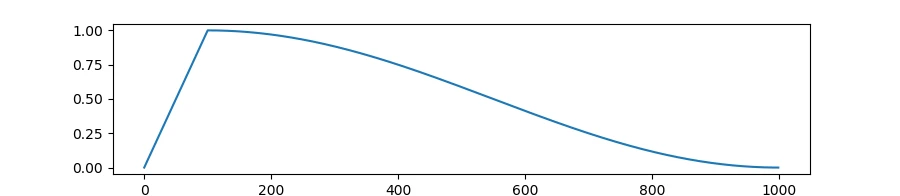

For the Learning Rate Scheduler, I use either Cosine with Restart or Constant with Warmup.

This graph illustrates the concept of Cosine with Restart.

This graph illustrates the concept of Constant with Warm-up.
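For readers who prefer numbers to graphs, the two schedules can be sketched as multipliers applied to the base learning rate. The formulas below follow a widely used formulation of these schedulers. Whether SeaArt implements them identically is an assumption, and the function names and example values are illustrative only.

```python
import math


def constant_with_warmup(step: int, warmup_steps: int) -> float:
    """Ramp linearly from 0 to 1 during warmup, then stay at 1."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return 1.0


def cosine_with_restarts(step: int, total_steps: int, warmup_steps: int, num_cycles: int) -> float:
    """Linear warmup, then cosine decay that jumps back to 1 at each restart."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    if progress >= 1.0:
        return 0.0
    # The modulo resets the cosine curve at the start of every cycle.
    cycle_position = (num_cycles * progress) % 1.0
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * cycle_position)))


base_lr = 0.0001  # the default learning rate mentioned in this article
for step in (0, 25, 50, 75, 99):
    lr = base_lr * cosine_with_restarts(step, total_steps=100, warmup_steps=0, num_cycles=2)
    print(step, lr)
```

With two cycles over 100 steps, the rate decays during the first 50 steps, restarts at the full value at step 50, and decays again toward the end.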

For the optimizer, I use AdamW 8-bit, AdamW, or Prodigy. Prodigy is an excellent choice for beginners, as it does not require you to adjust the learning rate.

For Restart Times, Train U-Net Only, Train Text Encoder Only, and Minimum SNR Gamma, I use the default settings.

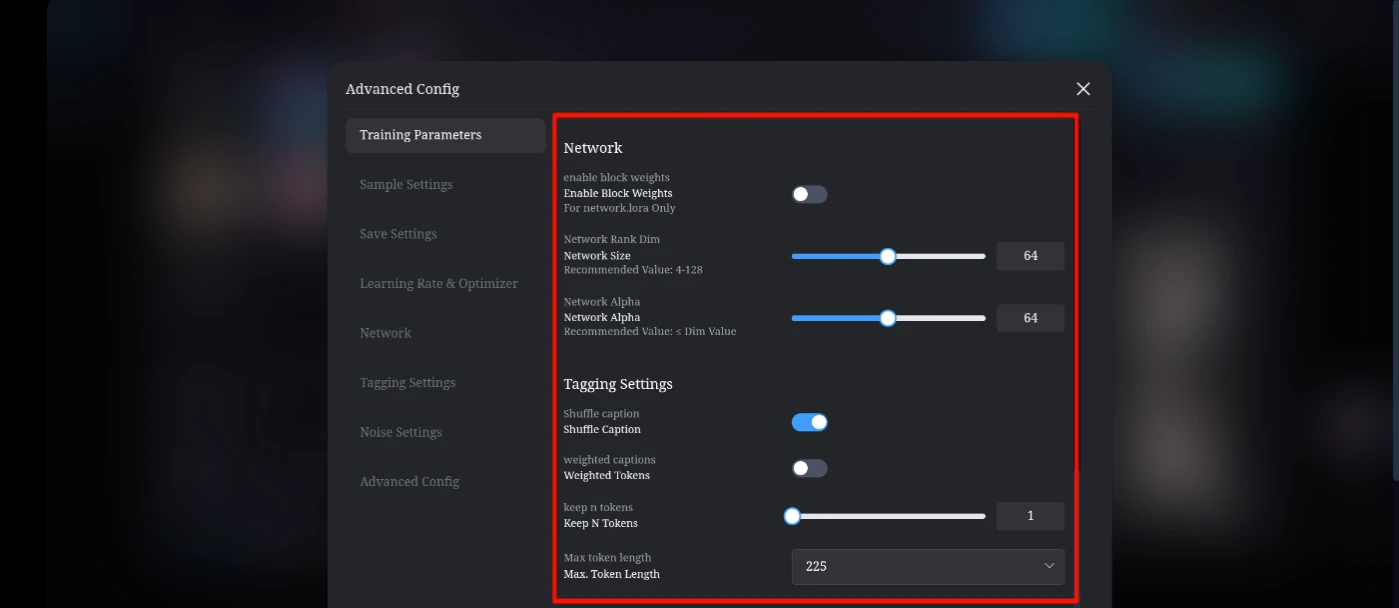

For Enable Block Weight, I use the default setting.

Network Size (Dimensions): I use a size of 64. The default for Flux is 2, which may not be sufficient for a complex dataset.

For Network Alpha, I use 64; however, you may consider increasing or decreasing this value.
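Network Alpha is easiest to reason about relative to the Network Size. In the standard LoRa formulation, the learned update is scaled by alpha divided by the dimension (rank). The sketch below assumes SeaArt's trainer follows that convention, which the article does not confirm.

```python
def lora_scale(network_alpha: float, network_dim: int) -> float:
    """Scaling factor applied to the LoRa update in the standard formulation."""
    return network_alpha / network_dim


print(lora_scale(64, 64))  # 1.0, the settings used in this article
print(lora_scale(32, 64))  # 0.5, a lower alpha weakens the update
```

Under this convention, lowering alpha while keeping the dimension fixed has an effect similar to lowering the learning rate.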

Shuffle Captions is enabled by default.

Weights token is disabled by default.

Keep N Token is set to the default value of 1.

The maximum token length is set to 225 by default.
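Shuffle Captions and Keep N Token work together: the caption's tags are reordered on each pass, while the first N tags (usually the trigger word) stay in place. The following is a minimal sketch of that behavior, assuming comma-separated tags as in common LoRa trainers. The function name and the example caption are hypothetical.

```python
import random


def shuffle_caption(caption: str, keep_n: int, rng: random.Random) -> str:
    """Shuffle comma-separated tags while keeping the first keep_n in place."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    fixed, rest = tags[:keep_n], tags[keep_n:]
    rng.shuffle(rest)  # only the tags after the kept ones are reordered
    return ", ".join(fixed + rest)


rng = random.Random(0)
caption = "mytrigger, 1girl, blue hair, school uniform, smile"
print(shuffle_caption(caption, keep_n=1, rng=rng))
```

With Keep N Token set to 1, the trigger word always stays first, so the model keeps associating it with the whole dataset.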

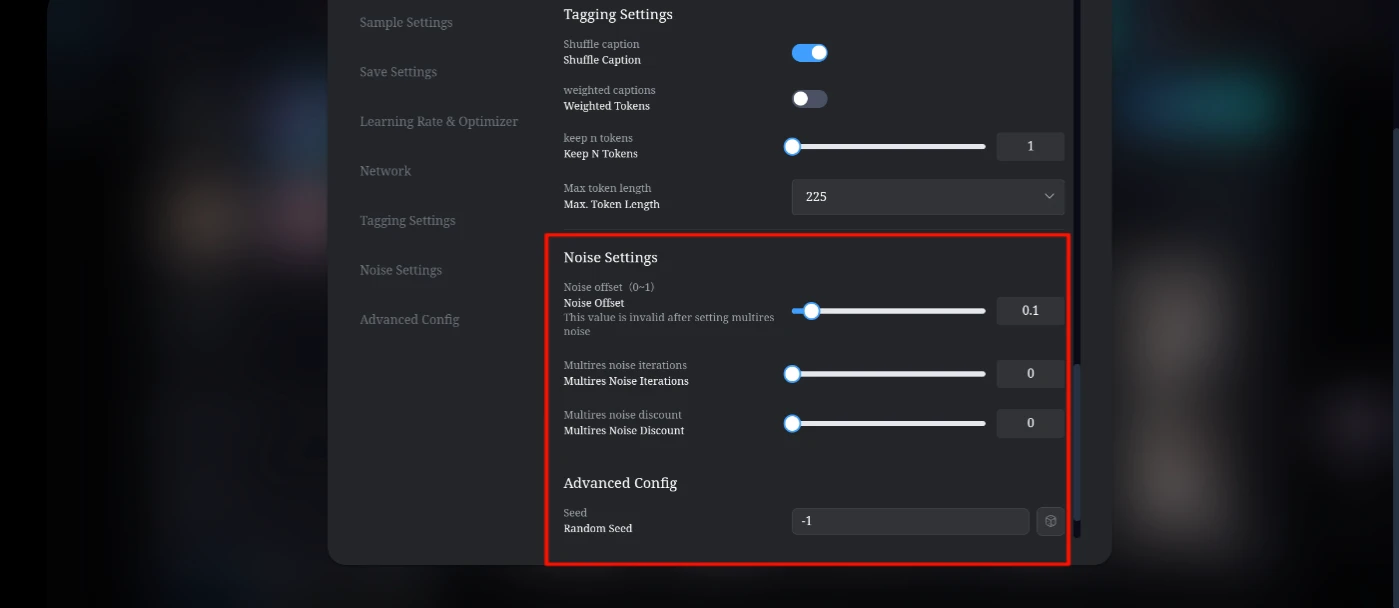

For the Noise Settings, I use the default option, and I set the Random Seed to -1. If you prefer a fixed seed, replace -1 with a seed of your choice.
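In many training tools, a seed of -1 means "pick a random seed for this run," while any other value makes the run repeatable. The sketch below illustrates that convention. It is an assumption about how SeaArt treats -1, and the function name is hypothetical.

```python
import random


def resolve_seed(seed: int) -> int:
    """Return the seed unchanged, or draw a random one when seed is -1."""
    if seed == -1:
        return random.randint(0, 2**32 - 1)
    return seed


print(resolve_seed(1234))  # 1234
print(resolve_seed(-1))    # a different value on each run
```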

After adjusting the settings, please exit the advanced settings tab and press the "Train Now" button.

The training time is approximately 1 to 3 hours, depending on your settings.

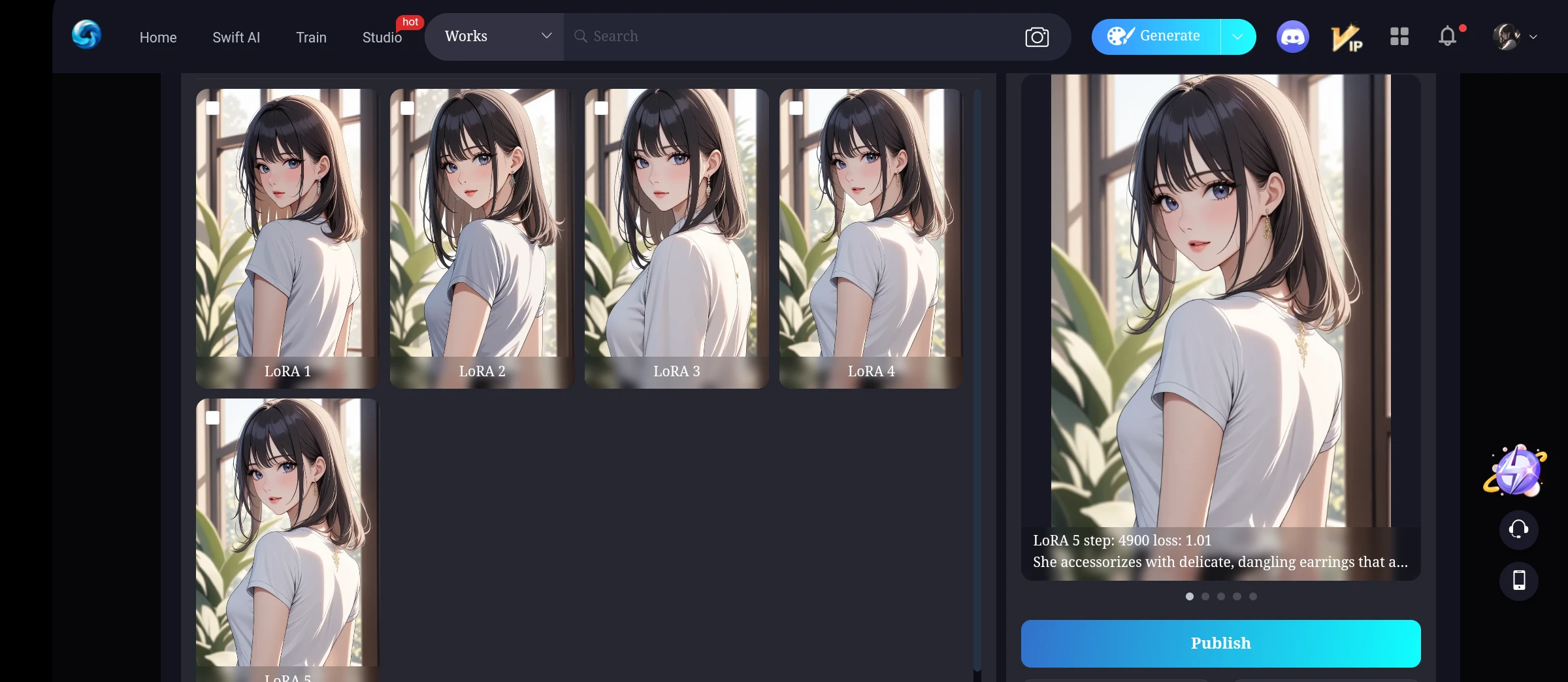

Once training is complete, you can publish the LoRa by selecting the one with the last number and testing it. If the results are unsatisfactory, select the second-to-last LoRa and test that one instead.

This is the result of my LoRa training. In my opinion, it still requires enhancement; however, I view this as a valuable learning experience that will facilitate future improvements.

Anime Tuning Flux

Conclusion:

Training LoRa is indeed straightforward; however, creating a masterpiece is quite challenging.

The settings I primarily use for training are available for your use. You may choose to adopt my settings or enhance them with your own, allowing us to learn together and improve our training of LoRa.

Thank you for taking the time to read my article. It may still require some improvements.