GPT-4o Mini Review: Fast but Limited

OpenAI has released GPT-4o Mini, a new model that the company says will completely replace GPT-3.5 Turbo. Here is a look at what it offers and where it falls short.

The main selling point of GPT-4o Mini is its speed: OpenAI introduced the model with the promise that it would be faster and more efficient. On the performance front, GPT-4o Mini scores 82% on MMLU (Massive Multitask Language Understanding) and outperforms GPT-4 on chat-related benchmarks on the LMSYS leaderboard.

When it comes to pricing, GPT-4o Mini is significantly cheaper than previous state-of-the-art models. Its commercial price is $0.15 per million input tokens and $0.60 per million output tokens, making it more than 60% cheaper than GPT-3.5 Turbo.
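To make the pricing concrete, here is a minimal sketch that estimates the cost of a workload from the per-million-token prices quoted above. The GPT-3.5 Turbo prices in the snippet ($0.50 input, $1.50 output per million tokens) are an assumption that does not come from this article, and the workload size is hypothetical, so treat the comparison as illustrative rather than authoritative.

```python
# USD per one million tokens. The GPT-4o Mini figures are the ones quoted above.
GPT_4O_MINI = {"input": 0.15, "output": 0.60}

# ASSUMPTION: these GPT-3.5 Turbo prices are not stated in this article;
# check the current price list before relying on them.
GPT_35_TURBO = {"input": 0.50, "output": 1.50}


def estimate_cost(prices, input_tokens, output_tokens):
    """Return the USD cost of a workload at the given per-million-token prices."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


def savings_percent(cheaper, baseline, input_tokens, output_tokens):
    """Percentage saved by using `cheaper` instead of `baseline` for the same workload."""
    base = estimate_cost(baseline, input_tokens, output_tokens)
    new = estimate_cost(cheaper, input_tokens, output_tokens)
    return (base - new) / base * 100


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500k output tokens.
    tokens_in, tokens_out = 2_000_000, 500_000
    cost = estimate_cost(GPT_4O_MINI, tokens_in, tokens_out)
    saved = savings_percent(GPT_4O_MINI, GPT_35_TURBO, tokens_in, tokens_out)
    print(f"GPT-4o Mini cost: ${cost:.2f}")
    print(f"Savings vs. assumed GPT-3.5 Turbo prices: {saved:.0f}%")
```

Under these assumptions, the hypothetical workload costs $0.60 on GPT-4o Mini, a saving of roughly 66%, which is in line with the "more than 60% cheaper" figure. The exact percentage depends on the mix of input and output tokens.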

Hands-On with GPT-4o Mini

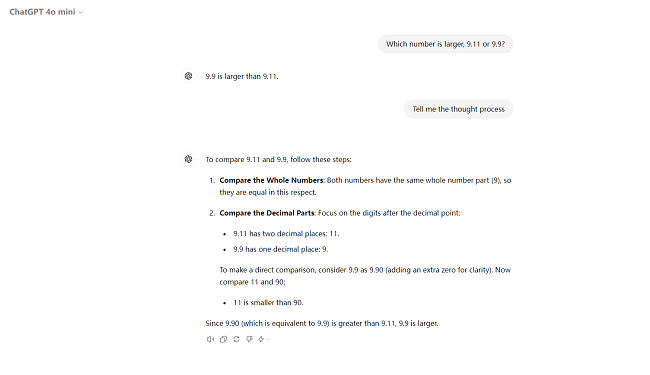

I tested GPT-4o Mini by asking it a popular question: which is larger, 9.11 or 9.9? GPT-4o Mini correctly answered that 9.9 is greater than 9.11.
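The question is a popular probe because the two strings order differently depending on how they are read. The sketch below only illustrates that arithmetic; it makes no claim about how any model reasons internally, and the function names are hypothetical.

```python
from decimal import Decimal


def larger_as_decimal(a: str, b: str) -> str:
    """Compare the two strings as ordinary decimal numbers."""
    return a if Decimal(a) > Decimal(b) else b


def larger_as_version(a: str, b: str) -> str:
    """Compare the two strings as dotted version numbers (major.minor)."""
    def key(s: str) -> tuple:
        # "9.11" becomes (9, 11), so each part is compared as an integer.
        return tuple(int(part) for part in s.split("."))
    return a if key(a) > key(b) else b


if __name__ == "__main__":
    print("As decimals, the larger is:", larger_as_decimal("9.11", "9.9"))
    print("As versions, the larger is:", larger_as_version("9.11", "9.9"))
```

Read as decimals, 9.9 is larger because 0.90 is greater than 0.11. Read as version numbers, 9.11 comes after 9.9 because 11 is greater than 9. The answer GPT-4o Mini gave matches the decimal reading.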

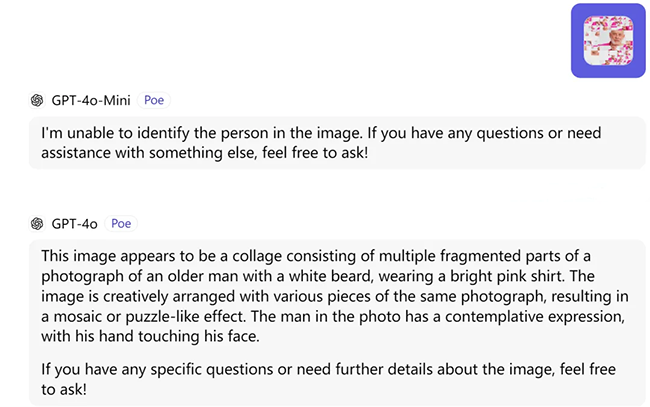

However, when I asked GPT-4o Mini to interpret the cover design of the biographical film "Eno" through Poe (Quora's chatbot app, which offers GPT-4o Mini), it failed. GPT-4o Mini simply stated, "I can't recognize the person in the photo."

In contrast, GPT-4o provided a detailed and accurate description: "This image appears to be a collage, with multiple fragments of a photo arranged creatively to produce a mosaic or puzzle-like effect. The photo depicts an elderly man with a white beard, wearing a bright pink shirt, looking pensive and touching his face."

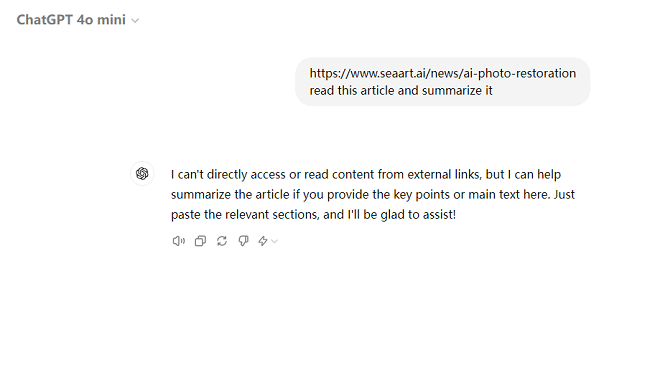

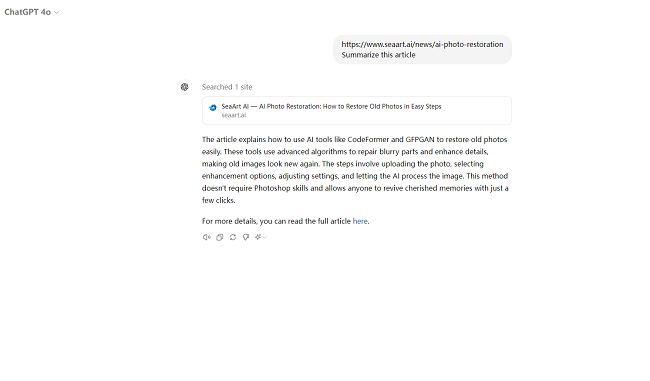

GPT-4o Mini also shows limitations when asked to summarize a linked article. It cannot directly access or read content from external links, whereas GPT-4 can read the article and summarize it accurately.

Speed Performance

GPT-4o Mini lives up to its claim of being "Faster for everyday tasks." Conversations with it are almost instantaneous, and its output speed is impressively rapid.

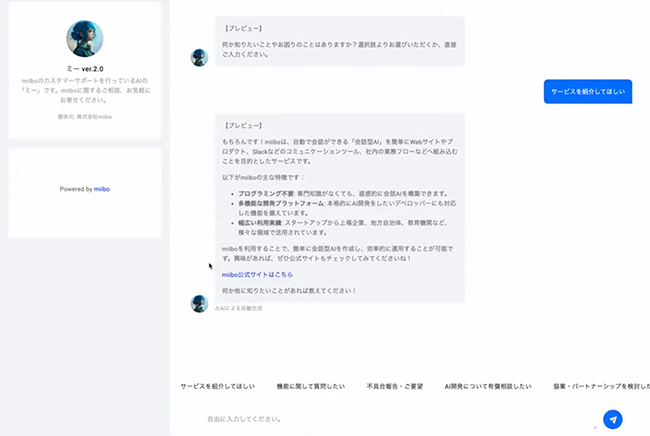

A Japanese user built an AI chatbot using GPT-4o Mini, and the response speed remained remarkably fast.

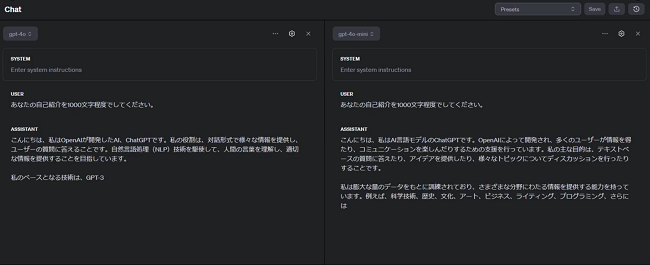

Another user compared the output speeds of GPT-4o and GPT-4o Mini and found GPT-4o Mini noticeably quicker.

User Experience

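While GPT-4o Mini excels in speed, the overall user experience falls short in some areas. In the tests above, it declined to describe an image that GPT-4o handled in detail, and it could not read a linked article to summarize it. It is fast, but it is not always as accurate or detailed as its larger counterparts.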

Meet the Team Behind GPT-4o Mini

OpenAI has once again surprised the community with the release of GPT-4o Mini. This project is led by a group of young scholars, including several notable Chinese researchers.

The project leader is Mianna Chen, who joined OpenAI in December last year. She previously worked as a product manager at Google DeepMind. Chen holds a bachelor's degree from Princeton University and an MBA from the Wharton School at the University of Pennsylvania, earned in 2020.

Other key contributors include Jacob Menick, Kevin Lu, Shengjia Zhao, Eric Wallace, Hongyu Ren, Haitang Hu, Nick Stathas, and Felipe Petroski Such.

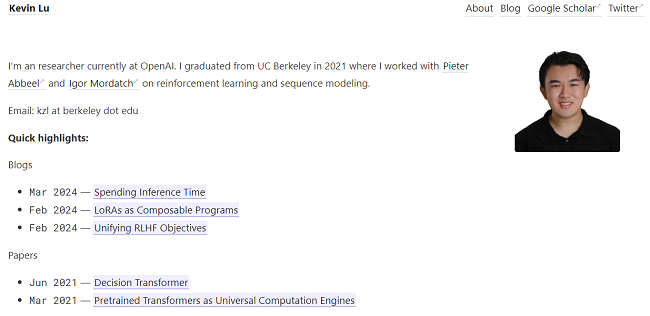

Kevin Lu, a researcher at OpenAI, graduated from UC Berkeley in 2021 and has worked on reinforcement learning and sequence modeling.

Shengjia Zhao, who joined OpenAI in June 2022, is a research scientist focusing on ChatGPT. He graduated from Tsinghua University and holds a Ph.D. from Stanford University.

Hongyu Ren joined OpenAI last July and is a core contributor to GPT-4o. He graduated from Peking University and Stanford University and has previously worked at Apple, Google, NVIDIA, and Microsoft.

Haitang Hu, who joined OpenAI in September last year, previously worked at Google and holds degrees from Tongji University and Johns Hopkins University.

The Trend Toward Smaller Models

With the release of GPT-4o Mini, many are wondering when GPT-5 will arrive. There is no official information yet, but it's clear that small models are becoming a new battleground.

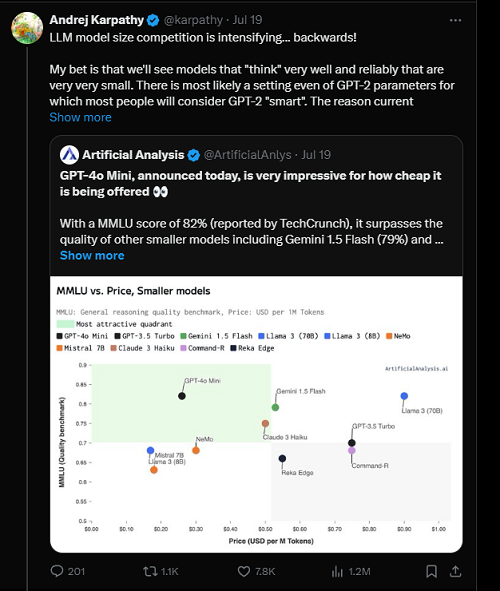

Andrej Karpathy, a founding member of OpenAI, notes that while competition over LLM size is intensifying, the trend is moving toward smaller models. He believes that smaller models can be highly efficient and reliable.

Karpathy suggests that training today's large models is like preparing them for a closed-book exam in which the textbook is the entire internet, so they end up memorizing a great deal. Future models might not need to memorize as much and could instead think and reason more effectively. He envisions a future where small models, possibly even ones with as few parameters as GPT-2, perform exceptionally well thanks to improved training techniques and data curation.

In summary, GPT-4o Mini is a promising step towards more efficient and accessible AI models. Its speed is impressive, making it suitable for everyday tasks, though it may not yet match the depth and accuracy of its larger predecessors. The development of smaller, more intelligent models is an exciting trend to watch in the AI field.