CogVideoX 5B Review: Image-to-Video AI Model Overview and Setup

CogVideoX 5B now offers image-to-video generation, turning a single static image into a short animated clip.

The model encodes the input image and generates motion from it, producing 6-second clips locally with no cloud-based service required. Its output may not yet match larger AI video models such as Runway or Kling AI, but CogVideoX 5B is a promising step forward for AI video generation that runs on your own hardware.
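The "6-second" figure follows from the clip's frame count and frame rate. This small sketch assumes 49 frames at 8 frames per second, the values published for CogVideoX 5B but not stated in this article; under that assumption the playback length comes out to just over 6 seconds.

```python
# Assumption: CogVideoX 5B outputs 49 frames at 8 frames per second,
# the figures published for the model (not stated in this article).
NUM_FRAMES = 49
FPS = 8

def playback_seconds(num_frames: int, fps: int) -> float:
    """Playback length when each frame is shown for 1/fps seconds."""
    return num_frames / fps

print(playback_seconds(NUM_FRAMES, FPS))  # 6.125
```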

What is CogVideoX 5B?

CogVideoX 5B is an image-to-video model that builds on the text-to-video capabilities of its predecessor. It encodes a static input image and generates motion from it, so users can create short AI videos with minimal setup.

How to Set Up CogVideoX 5B in ComfyUI

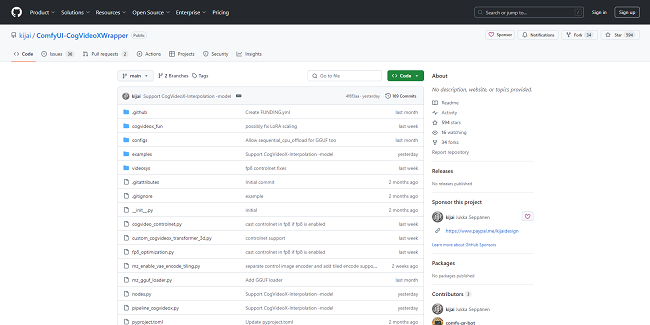

Setting up the CogVideoX 5B AI video model in ComfyUI is straightforward: download the models from GitHub and load them into a ComfyUI workflow. The key steps are:

Step 1: Download CogVideoX 5B Models

Go to the GitHub project page and download the required models. If you already have the T5-XXL FP8 text encoder (loaded in ComfyUI as a CLIP model), skip downloading the encoder folder to save disk space.
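The "skip the encoder folder" rule amounts to filtering the download list. This is a minimal sketch that assumes the encoder lives in a folder named text_encoder/; the file listing is made up for illustration, so check the real repository layout before relying on the pattern.

```python
from fnmatch import fnmatch

# Assumption: the T5 text encoder sits in a "text_encoder/" folder.
# Hypothetical pattern; verify against the actual repository layout.
ENCODER_PATTERN = "text_encoder/*"

def files_to_download(repo_files, have_t5_fp8):
    """Return the files still needed, skipping the encoder folder
    when a T5-XXL FP8 text encoder is already installed."""
    if not have_t5_fp8:
        return list(repo_files)
    return [f for f in repo_files if not fnmatch(f, ENCODER_PATTERN)]

# Hypothetical file listing, for illustration only.
repo_files = [
    "transformer/diffusion_pytorch_model.safetensors",
    "vae/diffusion_pytorch_model.safetensors",
    "text_encoder/model-00001-of-00002.safetensors",
    "text_encoder/model-00002-of-00002.safetensors",
    "scheduler/scheduler_config.json",
]

print(files_to_download(repo_files, have_t5_fp8=True))
```

If the model files are hosted on Hugging Face, the huggingface_hub library's snapshot_download function accepts an ignore_patterns argument that takes the same kind of glob.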

Step 2: Create CogVideo Subfolder

Inside ComfyUI's models folder, create a subfolder named CogVideo and place the downloaded CogVideoX 5B files in it.
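This step can also be scripted. The sketch below assumes models/CogVideo is where the ComfyUI node looks for CogVideoX models, and the folder names in the example call are hypothetical; adjust both to match your install.

```python
import shutil
from pathlib import Path

def install_model_folder(comfyui_root: str, model_dir: str) -> Path:
    """Move a downloaded model folder into <comfyui_root>/models/CogVideo/.

    Assumption: CogVideoX models are expected under models/CogVideo.
    """
    target_root = Path(comfyui_root) / "models" / "CogVideo"
    # Create the CogVideo subfolder (and models/ if missing); no error if it exists.
    target_root.mkdir(parents=True, exist_ok=True)
    destination = target_root / Path(model_dir).name
    if destination.exists():
        # Refuse to overwrite, since shutil.move would otherwise nest the folder.
        raise FileExistsError(f"{destination} already exists")
    shutil.move(str(model_dir), str(destination))
    return destination

# Example with hypothetical paths:
# install_model_folder("ComfyUI", "downloads/CogVideoX-5b-I2V")
```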

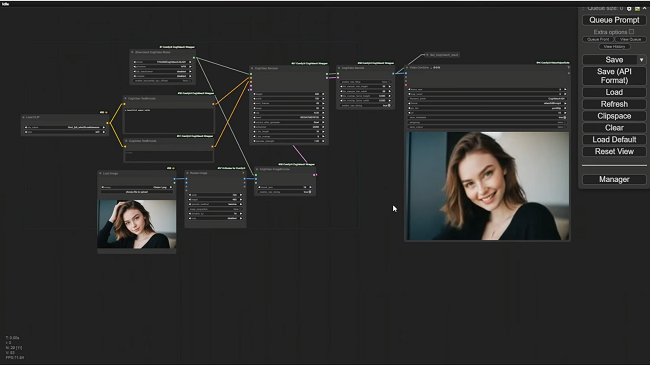

Step 3: Load and Configure the Model

Load a reference image in ComfyUI and resize it to 720x480, the only resolution CogVideoX supports. Then run the example workflow provided on GitHub to test the setup.
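Resizing an arbitrary image straight to 720x480 stretches it unless it already has a 3:2 aspect ratio. One way to avoid distortion, sketched here as an assumption rather than the workflow's own method, is to center-crop to 3:2 first and then scale. The helper below only computes the crop box, using integer arithmetic.

```python
TARGET_W, TARGET_H = 720, 480  # the resolution CogVideoX expects

def center_crop_box(width, height, target_w=TARGET_W, target_h=TARGET_H):
    """Largest centered box with the target aspect ratio, as (left, top, right, bottom)."""
    # Compare aspect ratios by cross-multiplying to stay in integer math.
    if width * target_h > height * target_w:
        # Wider than 3:2: keep the full height and trim the sides.
        crop_w = height * target_w // target_h
        left = (width - crop_w) // 2
        return (left, 0, left + crop_w, height)
    # Taller than (or exactly) 3:2: keep the full width and trim top and bottom.
    crop_h = width * target_h // target_w
    top = (height - crop_h) // 2
    return (0, top, width, top + crop_h)

print(center_crop_box(1920, 1080))  # (150, 0, 1770, 1080)
```

With Pillow, img.crop(box).resize((720, 480)) applies the result, and Pillow's ImageOps.fit performs the crop and resize in a single call.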

Performance of CogVideoX 5B

CogVideoX 5B handles simple motions such as a smile or basic movements well, but its quality is not yet comparable to larger AI models like Runway Gen-2 or Kling AI. Its main advantage is that it runs locally with no cloud dependency, which makes it appealing to users who want privacy and control.

Enhancing Video Output with the AnimateDiff Refiner

The AnimateDiff refiner can smooth motion and add detail to AI-generated videos. After generating a clip with CogVideoX 5B, run the output through the refiner to improve its visual quality.

Overall, CogVideoX 5B is a capable AI video model that runs locally and suits short video creation, with clear room for future improvement.